TrustMLRegulation – Managing Trust and Distrust in Machine Learning with Meaningful Regulation

Finished Project

Although there is no shortage of either promises or worries, proposals for meaningful regulation and governance of AI-based applications are still at an early stage. Regulation and governance are crucial for managing trust and distrust in Machine Learning (ML), yet it remains unclear how policy implementation would work in practice for different ML methods.

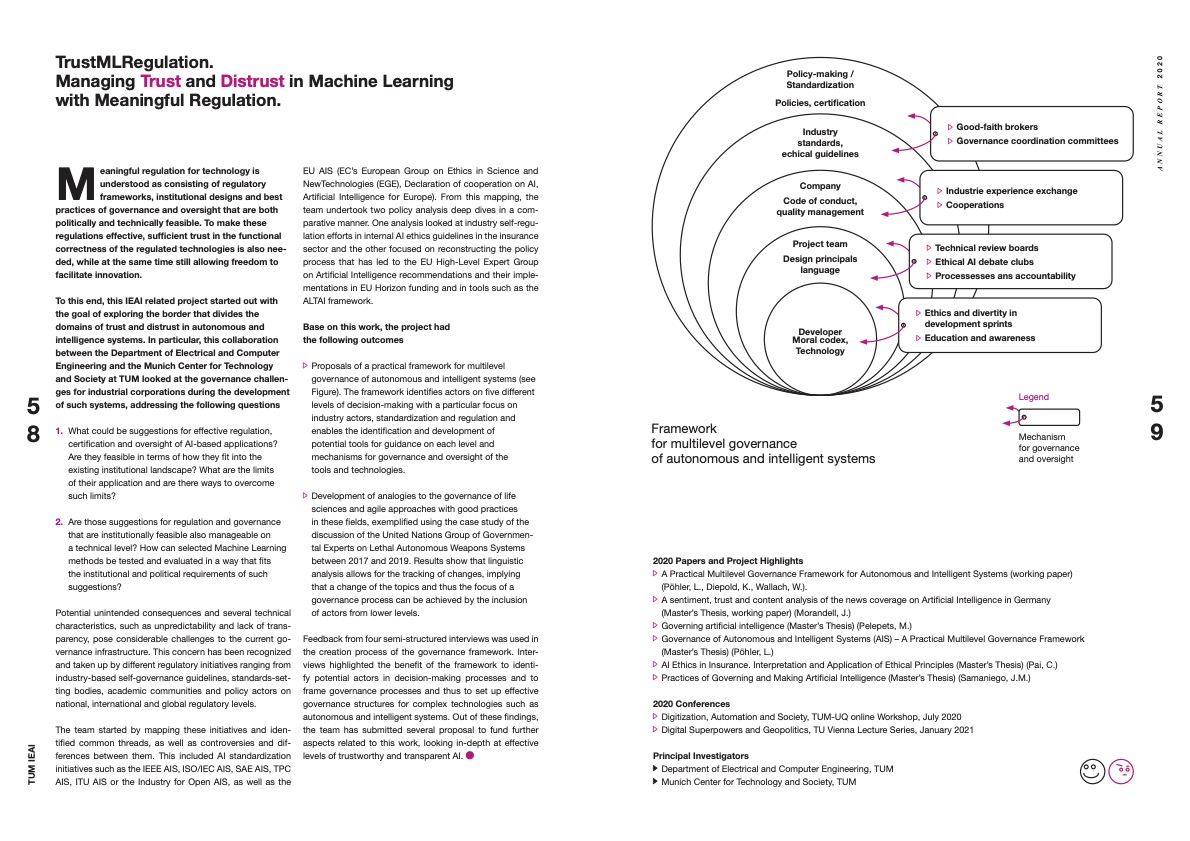

This project will investigate what "meaningful regulation" means at the technical, social and political levels by (1) mapping current proposals for regulation, certification and oversight, (2) evaluating the institutional scope and limits of their application in one exemplary domain (medicine/health), and (3) testing their feasibility for a selected range of ML methods. We will use experimental methods to test the conditions of trust and distrust in selected ML outputs and conduct focus groups to understand the scope and limits of these conditions.

Based on these insights, we will select proposals for regulation and governance that are both institutionally and technically meaningful and ask whether and how they can help manage trust and distrust in ML applications.